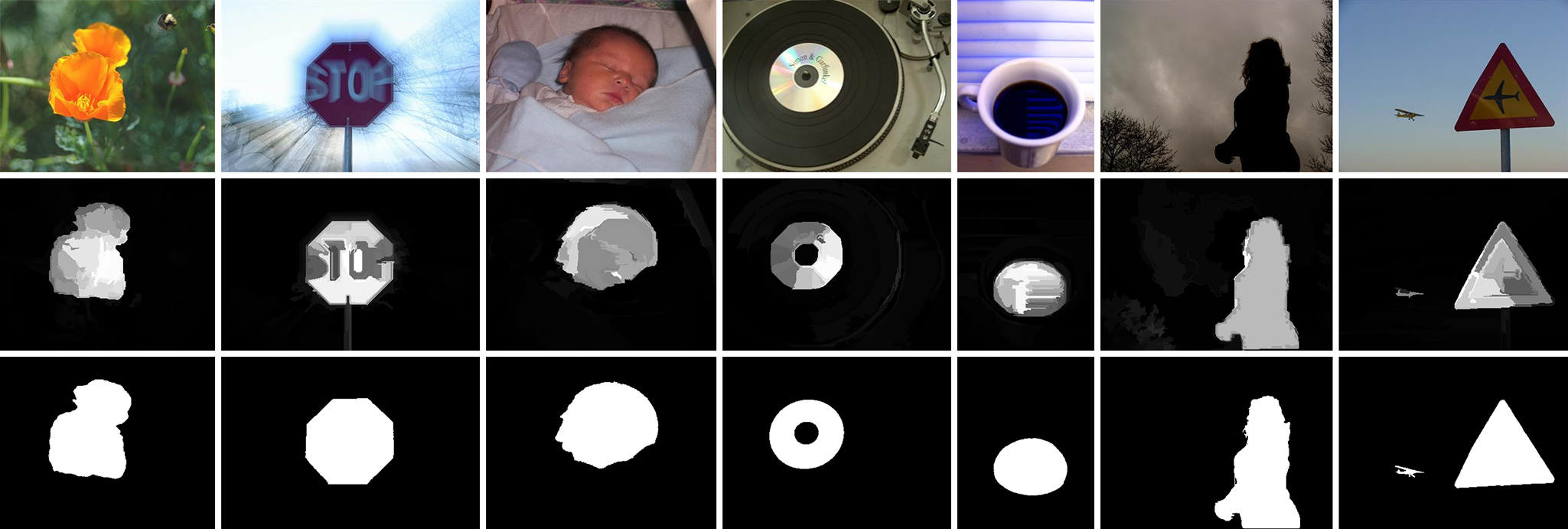

Figure 1. Saliency maps vs. ground truth. For several original images [20] (top), our method generates saliency maps (middle) by measuring regional principal color contrasts. These maps are comparable to the manually labeled ground truth [11] (bottom).


Saliency detection is widely used in visual applications such as image segmentation, object recognition, and classification. In this paper, we introduce a new method for detecting salient objects in natural images. The approach is based on a regional principal color contrast model, which incorporates low-level and medium-level visual cues. The method combines simply computed color features with two categories of spatial relationships to produce a saliency map, achieving higher F-measure rates. We also present an interpolation approach for evaluating the resulting curves and analyze how the parameters are selected. Our method can effectively compute saliency for images of arbitrary resolution. Experimental results on a saliency database show that our approach produces high-quality saliency maps and performs favorably against ten saliency detection algorithms.
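The abstract describes the contrast model only at a high level. The Python sketch below is a rough illustration of region-level color contrast and should not be read as the RPC implementation (see the released MATLAB code for that). It rests on several assumptions. The image is already segmented into regions, given as a label map. A region's "principal color" is taken to be its most frequent quantized RGB color. A region's score is its size-weighted color distance to every other region, attenuated by a Gaussian of the distance between region centroids. The names `quantize`, `region_stats`, `region_contrast_saliency`, `bins`, and `sigma2` are all hypothetical.

```python
import math
from collections import Counter


def quantize(rgb, bins=12):
    """Map an 8-bit RGB triple to the centre of its quantization cell."""
    step = 256 / bins
    return tuple((int(v // step) + 0.5) * step for v in rgb)


def region_stats(image, labels, bins=12):
    """Return {label: (principal_color, centroid, pixel_count)}.

    Assumption: the principal color is the most frequent quantized color
    in the region. Centroids use coordinates normalized to [0, 1].
    """
    h, w = len(image), len(image[0])
    hists, coords = {}, {}
    for y in range(h):
        for x in range(w):
            lab = labels[y][x]
            hists.setdefault(lab, Counter())[quantize(image[y][x], bins)] += 1
            coords.setdefault(lab, []).append(
                (y / max(h - 1, 1), x / max(w - 1, 1)))
    stats = {}
    for lab, hist in hists.items():
        principal = hist.most_common(1)[0][0]
        pts = coords[lab]
        centroid = (sum(p[0] for p in pts) / len(pts),
                    sum(p[1] for p in pts) / len(pts))
        stats[lab] = (principal, centroid, len(pts))
    return stats


def region_contrast_saliency(image, labels, bins=12, sigma2=0.4):
    """Hypothetical per-pixel saliency in [0, 1] from region color contrast."""
    stats = region_stats(image, labels, bins)
    raw = {}
    for k, (ck, pk, _) in stats.items():
        score = 0.0
        for j, (cj, pj, nj) in stats.items():
            if j == k:
                continue
            # Nearby regions count more than distant ones.
            spatial = math.exp(-math.dist(pk, pj) ** 2 / sigma2)
            # Larger regions contribute more contrast.
            score += nj * math.dist(ck, cj) * spatial
        raw[k] = score
    lo, hi = min(raw.values()), max(raw.values())
    span = (hi - lo) or 1.0
    # Normalize region scores to [0, 1] and paint them back onto the pixels.
    return [[(raw[lab] - lo) / span for lab in row] for row in labels]
```

Consider a 4×4 gray image with a 2×2 red block in the middle, where the block is region 1 and the rest is region 0. The block receives saliency 1.0 and the background 0.0. This happens because the block contrasts with twelve background pixels, while the background contrasts with only four block pixels.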


Jing Lou, Mingwu Ren*, Huan Wang, “Regional Principal Color Based Saliency Detection,” PLoS ONE, vol. 9, no. 11, pp. e112475: 1–13, 2014. doi:10.1371/journal.pone.0112475

PDF | Bib | MATLAB Code | Slides (in Chinese)


The original SED2 dataset was designed for segmentation evaluation. The ground-truth segmentations of each input image were obtained from one to three different human subjects. The zip file SED2_GT includes the MATLAB code and the binary masks for saliency evaluation. If you use this code or data, please cite the above paper.


We provide the saliency maps of the proposed RPC method on the following benchmark datasets. The zip files can be downloaded from GitHub (below) or Baidu Cloud. Each zip file also includes two evaluation measures, Precision-Recall and F-measure. For usage, see the help text in the PlotPRF script. If you use any part of our saliency maps or evaluation measures, please cite our paper.
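If you want to reproduce these measures without MATLAB, the Python sketch below follows the usual procedure in the saliency literature. It binarizes an 8-bit saliency map at every threshold from 0 to 255 and compares each binary map with the ground-truth mask. It then combines precision P and recall R as F_β = (1 + β²)PR / (β²P + R). Several choices are assumptions, not a description of PlotPRF, which may differ in how it handles thresholds: β² = 0.3 follows the convention of Achanta et al. (2009), and the function names and the precision value used when no pixel is predicted salient are our own.

```python
def pr_curve(saliency, gt, thresholds=range(256)):
    """Return a list of (threshold, precision, recall) tuples.

    saliency: flat list of 8-bit values (0-255), one per pixel.
    gt: flat list of booleans, True for ground-truth salient pixels.
    """
    positives = sum(gt)
    curve = []
    for t in thresholds:
        tp = fp = 0
        for s, g in zip(saliency, gt):
            if s >= t:  # pixel is predicted salient at this threshold
                if g:
                    tp += 1
                else:
                    fp += 1
        # Conventions differ when nothing is predicted; 1.0 is used here.
        precision = tp / (tp + fp) if tp + fp else 1.0
        recall = tp / positives if positives else 0.0
        curve.append((t, precision, recall))
    return curve


def f_measure(precision, recall, beta2=0.3):
    """Weighted harmonic mean of precision and recall, with beta^2 = beta2."""
    denom = beta2 * precision + recall
    return (1 + beta2) * precision * recall / denom if denom else 0.0
```

A dataset-level curve is typically obtained by averaging the per-image precision and recall at each threshold before plotting. Applying `f_measure` to each averaged pair then gives an F-measure curve over thresholds.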

| Dataset | Images | Saliency Maps | References |
| --- | --- | --- | --- |
| ASD (MSRA1000/MSRA1K) | 1000 | ASD_RPC | • T. Liu, J. Sun, N.-N. Zheng, X. Tang, and H.-Y. Shum, “Learning to detect a salient object,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit., 2007, pp. 1–8.<br>• R. Achanta, S. Hemami, F. Estrada, and S. Süsstrunk, “Frequency-tuned salient region detection,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit., 2009, pp. 1597–1604. |
| DUTOMRON | 5166 | DUTOMRON_RPC | • C. Yang, L. Zhang, H. Lu, X. Ruan, and M.-H. Yang, “Saliency detection via graph-based manifold ranking,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit., 2013, pp. 3166–3173. |
| ECSSD | 1000 | ECSSD_RPC | • Q. Yan, L. Xu, J. Shi, and J. Jia, “Hierarchical saliency detection,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit., 2013, pp. 1155–1162.<br>• J. Shi, Q. Yan, L. Xu, and J. Jia, “Hierarchical image saliency detection on extended CSSD,” IEEE Trans. Pattern Anal. Mach. Intell., vol. 38, no. 4, pp. 717–729, 2016. |
| ImgSal | 235 | ImgSal_RPC | • J. Li, M. D. Levine, X. An, and H. He, “Saliency detection based on frequency and spatial domain analysis,” in Proc. Br. Mach. Vis. Conf., 2011, pp. 86: 1–11.<br>• J. Li, M. D. Levine, X. An, X. Xu, and H. He, “Visual saliency based on scale-space analysis in the frequency domain,” IEEE Trans. Pattern Anal. Mach. Intell., vol. 35, no. 4, pp. 996–1010, 2013. |
| JuddDB | 900 | JuddDB_RPC | • T. Judd, K. Ehinger, F. Durand, and A. Torralba, “Learning to predict where humans look,” in Proc. IEEE Int. Conf. Comput. Vis., 2009, pp. 2106–2113.<br>• A. Borji, “What is a salient object? A dataset and a baseline model for salient object detection,” IEEE Trans. Image Process., vol. 24, no. 2, pp. 742–756, 2015. |
| MSRA10K | 10000 | MSRA10K_RPC | • T. Liu, J. Sun, N.-N. Zheng, X. Tang, and H.-Y. Shum, “Learning to detect a salient object,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit., 2007, pp. 1–8.<br>• R. Achanta, S. Hemami, F. Estrada, and S. Süsstrunk, “Frequency-tuned salient region detection,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit., 2009, pp. 1597–1604.<br>• M.-M. Cheng, N. J. Mitra, X. Huang, P. H. S. Torr, and S.-M. Hu, “Global contrast based salient region detection,” IEEE Trans. Pattern Anal. Mach. Intell., vol. 37, no. 3, pp. 569–582, 2015. |
| SED2 | 100 | SED2_RPC | • S. Alpert, M. Galun, R. Basri, and A. Brandt, “Image segmentation by probabilistic bottom-up aggregation and cue integration,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit., 2007, pp. 1–8.<br>• A. Borji, D. N. Sihite, and L. Itti, “Salient object detection: A benchmark,” in Proc. Eur. Conf. Comput. Vis., 2012, pp. 414–429. |
| THUR15K | 6232 | THUR15K_RPC | • M.-M. Cheng, N. J. Mitra, X. Huang, and S.-M. Hu, “SalientShape: Group saliency in image collections,” Vis. Comput., vol. 30, no. 4, pp. 443–453, 2014. |

Figure 7. Visual results of our method compared with ground truth and other methods on the MSRA-1000 dataset. (A) Original images [20]. (B) Ground truth [11]. (C) IT [1]. (D) SR [14]. (E) FT [11]. (F) CA [19]. (G) RC [10]. (H) Ours.


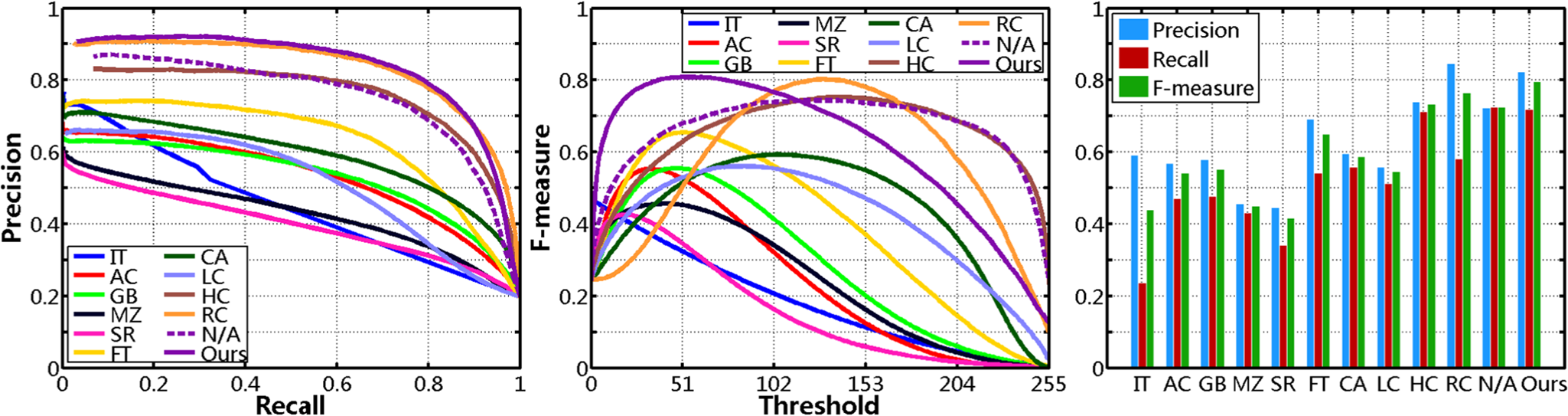

Figure 8. Quantitative comparison on the MSRA-1000 dataset (N/A denotes no center-bias). (A) Precision-Recall curves. (B) F-measure curves. (C) Precision-Recall bars.
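Figure 8 distinguishes results with and without center-bias, but this page does not say how the center prior is applied. One common form multiplies each saliency value by a Gaussian weight centered on the image, which attenuates pixels near the border. The Python sketch below shows that form under this assumption. The function name `apply_center_bias` and the default `sigma` are hypothetical.

```python
import math


def apply_center_bias(saliency, sigma=0.25):
    """Multiply a 2-D saliency map by a Gaussian centered on the image.

    Coordinates are normalized so the image spans [-0.5, 0.5] on each
    axis; sigma is expressed in the same normalized units.
    """
    h, w = len(saliency), len(saliency[0])
    biased = []
    for y in range(h):
        ny = y / (h - 1) - 0.5 if h > 1 else 0.0
        row = []
        for x in range(w):
            nx = x / (w - 1) - 0.5 if w > 1 else 0.0
            # Weight is 1 at the image center and decays toward the border.
            weight = math.exp(-(nx * nx + ny * ny) / (2 * sigma ** 2))
            row.append(saliency[y][x] * weight)
        biased.append(row)
    return biased
```

A smaller `sigma` suppresses off-center regions more strongly. Without any such weighting, the output corresponds to the "no center-bias" setting.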


This research was supported by the National Natural Science Foundation of China (NSFC, Grant nos. 61231014, 60875010).

The authors would like to thank all the anonymous reviewers for the constructive comments and useful suggestions that led to improvements in the quality and presentation of this paper. We thank Shuan Wang, Xiang Li, Longtao Chen, and Boyuan Feng for useful discussions. We also thank James S. Krugh and Rui Guo for their kind proofreading of this manuscript.